The second generation of the server is a natural evolution of the first. Most of the core components remain, but they are rearranged into a more flexible and manageable model that is closer to a typical component architecture. The new model is designed to be easier to administer, to run in a "server farm", and to extend with new functionality.

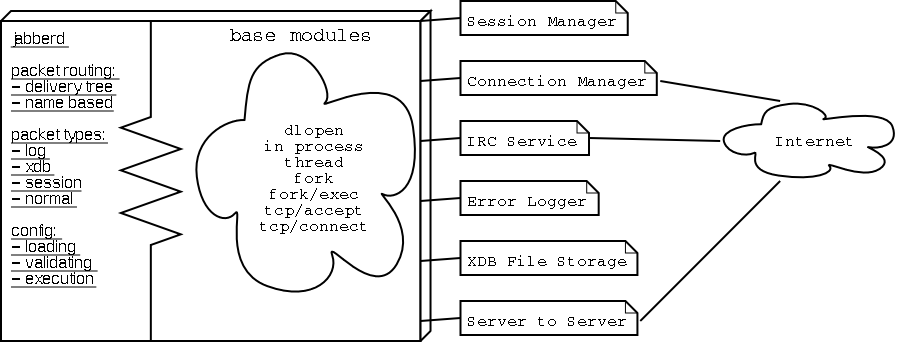

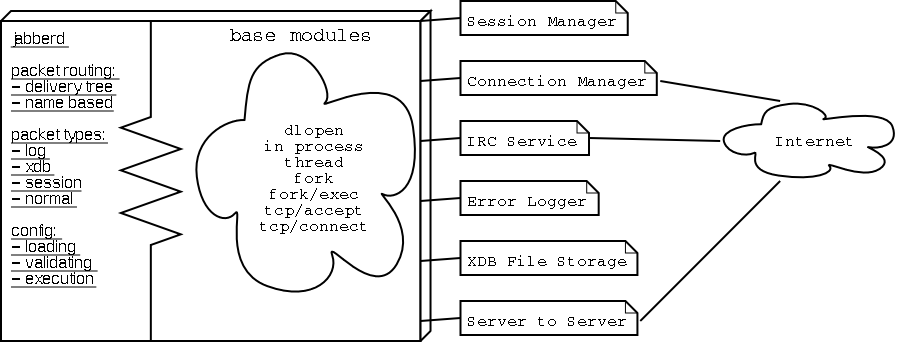

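The architecture consists of an intelligent "jabberd" process that only manages the config file and a packet delivery tree, and loads base modules. The base modules implement the packet-handling functionality and hook into the delivery tree to receive packets. Base modules include those providing the handler functions that appear in the delivery tree below, such as base_filter(), base_socket(), base_thread(), and base_file().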
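To make "jabberd loads base modules, which hook into the delivery tree" concrete, here is a minimal C sketch. It assumes a static table mapping module names to init functions and a flat hook list standing in for the delivery tree. The names `register_hook()`, `load_module()`, `handler_fn`, and the "return 1 if handled, 0 to pass" convention are hypothetical illustrations, not the actual jabberd API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical handler signature: return 1 if the packet was handled,
 * 0 to let the next registered function try. */
typedef int (*handler_fn)(const char *packet);

/* Hypothetical stand-in for the delivery tree: a flat list of
 * (config section id, handler) hooks. */
#define MAX_HOOKS 16
static struct {
    const char *section;
    handler_fn fn;
} hooks[MAX_HOOKS];
static int nhooks = 0;

/* Called by a base module to hook a handler into the tree. */
static int register_hook(const char *section, handler_fn fn) {
    if (nhooks >= MAX_HOOKS)
        return -1;
    hooks[nhooks].section = section;
    hooks[nhooks].fn = fn;
    nhooks++;
    return 0;
}

/* Two toy base modules; real ones would open sockets, write files, etc. */
static int socket_handler(const char *packet) { return packet != NULL; }
static int file_handler(const char *packet) { return packet != NULL; }

static int base_socket_init(const char *section) { return register_hook(section, socket_handler); }
static int base_file_init(const char *section) { return register_hook(section, file_handler); }

/* Table of compiled-in base modules, looked up by the name used in the config. */
static const struct {
    const char *name;
    int (*init)(const char *section);
} base_modules[] = {
    {"base_socket", base_socket_init},
    {"base_file", base_file_init},
};

/* jabberd's only job here: map a config section to a module and let the
 * module register itself. Returns 0 on success, -1 if unknown or full. */
static int load_module(const char *section, const char *module_name) {
    assert(section != NULL && module_name != NULL);
    for (size_t i = 0; i < sizeof base_modules / sizeof base_modules[0]; i++) {
        if (strcmp(base_modules[i].name, module_name) == 0)
            return base_modules[i].init(section);
    }
    return -1;
}
```

For example, `load_module("server2server", "base_socket")` hooks a socket handler under that section id. The design point is that the core process knows nothing about sockets or files; new functionality arrives as a new module plus a config section.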

The graphics at the top of this section give a simple high-level view of the Jabber II architecture.

Example simple config file

The development of Jabber II will be staged over at least three stable releases: 1.2 will deliver the base architecture shift along with the socket-scaling and server-farm enhancements; 1.4 will move to a better threading model (native, pthreads, mpm); and 2.0 will focus on general stability and updates.

Details (ignore for now, just notes):

- extend jpackets, 4 types of routing
  - normal (from/to)
  - xdb:get="user", set, result
  - sid="jid" (use to route before to/from)
  - error logging requests

- each host/component
  - (tcp, other io) queueing, timeout, pings
  - xdb for config

- distrib
  - smart routing
  - broadcast to all (small scale)

- session manager
  - init for each host (for vhosting dso)
  - pass id tokens on calls
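The four routing types in the notes line up with the second level of the delivery tree described below (xdb / log / session / normal). A minimal C sketch of that classification follows. The `jpacket_sketch` struct, `classify()`, `route_jid()`, and the precedence order (xdb and log recognized by element name, then sid checked before to/from) are all assumptions for illustration, not the real jpacket code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* The four routing types from the notes (level 2 of the delivery tree). */
typedef enum { ROUTE_NORMAL, ROUTE_XDB, ROUTE_SESSION, ROUTE_LOG } route_type;

/* Hypothetical, simplified view of an extended jpacket. */
struct jpacket_sketch {
    const char *element; /* top-level element name, e.g. "message", "xdb", "log" */
    const char *to;
    const char *from;
    const char *sid;     /* session JID, or NULL when absent */
};

/* Assumed precedence: xdb and log are recognized by element name,
 * then sid is checked before falling back to normal to/from routing. */
static route_type classify(const struct jpacket_sketch *p) {
    assert(p != NULL && p->element != NULL);
    if (strcmp(p->element, "xdb") == 0)
        return ROUTE_XDB;
    if (strcmp(p->element, "log") == 0)
        return ROUTE_LOG;
    if (p->sid != NULL)
        return ROUTE_SESSION;
    return ROUTE_NORMAL;
}

/* The address the next tree level routes on: the sid for session packets
 * ("use to route before to/from"), otherwise the to address. */
static const char *route_jid(const struct jpacket_sketch *p) {
    return classify(p) == ROUTE_SESSION ? p->sid : p->to;
}
```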

Delivery tree logic:

```text
1: packet
   |
2: xdb / log / session / normal
   |
3: foo.org / isp.net / *
   |
4: id="a" / id="b"
   |
5: base_filter() / base_socket() / base_thread()
```

1. packet to deliver
2. branch based on the type of packet
3. branch based on the specific hostname, or * to catch any hostname
4. copy to each configured service for that hostname branch
5. call the registered functions to handle or modify the packet until one of them handles it
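The five steps above can be sketched in C as follows. This is a minimal illustration under stated assumptions: sections live in a flat array rather than a real tree, a packet is a plain string, an exact host match wins and "*" is used only as a fallback when nothing matched, and level-5 functions return a hypothetical `PASS`/`HANDLED` result. `toy_filter()` (which drops packets containing "spam") and `toy_deliver()` are toy stand-ins for base_filter(), base_thread(), and friends, and the second `archive` section on foo.org is hypothetical, added to show every matching section getting its own copy.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Level 2: packet types. */
typedef enum { PKT_XDB, PKT_LOG, PKT_SESSION, PKT_NORMAL } pkt_type;

/* Level 5 contract (assumed): HANDLED stops the chain, PASS tries the next function. */
typedef enum { PASS, HANDLED } result;
typedef result (*handler_fn)(char *packet, const char *id);

#define MAX_HANDLERS 4

/* Level 4: one configured section (id="...") registered under a type and host. */
struct section {
    pkt_type type;
    const char *host;                  /* a hostname, or "*" to catch any */
    const char *id;
    handler_fn handlers[MAX_HANDLERS]; /* called in order; unused slots are NULL */
};

/* Give one section its own copy (so handlers may modify it freely) and run
 * its handlers until one handles the packet. Returns 1 if handled. */
static int deliver_to_section(const struct section *s, const char *packet) {
    size_t len = strlen(packet) + 1;
    char *copy = malloc(len);
    if (copy == NULL)
        return 0;
    memcpy(copy, packet, len);
    int handled = 0;
    for (int i = 0; i < MAX_HANDLERS && s->handlers[i] != NULL && !handled; i++)
        handled = (s->handlers[i](copy, s->id) == HANDLED);
    free(copy);
    return handled;
}

/* Levels 2-4: branch on type, then on the exact host (falling back to "*"
 * only if nothing matched), then copy to every matching section.
 * Returns the number of sections that handled the packet. */
static int deliver(const struct section *tree, size_t n, pkt_type type,
                   const char *host, const char *packet) {
    int matched = 0, handled = 0;
    for (int pass = 0; pass < 2 && matched == 0; pass++) {
        const char *want = (pass == 0) ? host : "*";
        for (size_t i = 0; i < n; i++) {
            if (tree[i].type != type || strcmp(tree[i].host, want) != 0)
                continue;
            matched++;
            handled += deliver_to_section(&tree[i], packet);
        }
    }
    return handled;
}

/* Toy level-5 functions standing in for base_filter(), base_thread(), etc. */
static const char *last_id = NULL;
static int deliveries = 0;

static result toy_filter(char *packet, const char *id) {
    (void)id;
    return strstr(packet, "spam") != NULL ? HANDLED : PASS; /* drop spam, pass the rest */
}

static result toy_deliver(char *packet, const char *id) {
    (void)packet;
    last_id = id;
    deliveries++;
    return HANDLED;
}

/* The example routes listed in this section, plus the hypothetical
 * "archive" section on foo.org. */
static const struct section example_tree[] = {
    {PKT_SESSION, "foo.org", "foo.org", {toy_filter, toy_deliver}},
    {PKT_SESSION, "foo.org", "archive", {toy_deliver}},
    {PKT_NORMAL, "*", "server2server", {toy_deliver}},
    {PKT_XDB, "isp.net", "ldap", {toy_deliver}},
    {PKT_LOG, "*", "filelog", {toy_deliver}},
};
```

For example, `deliver(example_tree, 5, PKT_NORMAL, "newserver.com", "<message/>")` finds no exact host, falls back to "*", and reaches the `server2server` section. A flat array keeps the sketch short; an actual tree would index by type and host so that delivery does not scan every section.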

Examples:

- incoming packet from a user for their session: `session >>> foo.org >>> id="foo.org" >>> base_thread()`
- incoming packet to a new server: `normal >>> * >>> id="server2server" >>> base_socket()`
- xdb request for a user's roster: `xdb >>> isp.net >>> id="ldap" >>> base_thread()`
- error log entry from a service: `log >>> * >>> id="filelog" >>> base_file()`

In many of the examples, several id="" sections (each representing a configured section of the config file) may match the host entry, and each one gets its own copy of the packet. After receiving its copy, a section may apply further filters or restrictions based on something other than the name; the registered functions handle these.